Presenting GINNY to FirstSource

Invited to present the GINNY project to FirstSource and the Honourable Minister Danny Pearson during the MoU signing with Monash University.

I study long‑term human‑robot social interactions. My work integrates speech, vision, and action with social world‑model graphs, with the goal of creating anthropomorphic humanoids. At Monash University we call this project GINNY, and we plan to publish more work under it in the future.

I have over two years of combined research and industry experience as a Computer Vision and AI Engineer. At Monash University, I contributed to the DARPA ANSR Project as a Research Assistant, developing autonomous perception and tracking modules for aerial simulation environments. My research focuses on social robotics and multimodal reasoning, where I work on building agentic AI systems for long-term human–robot interaction.

Prior to this, I engineered computer vision solutions at Awiros (Awidit Systems) and Aditya Infotech, deploying deep learning models for facial recognition and video analytics for Bangalore's Safe City Project. I also serve as a Teaching Associate, mentoring students in Artificial Intelligence (FIT3080), Python Programming (FIT9136), and Software Projects (FIT3161 / FIT3162 / FIT3163 / FIT3164).

Recent updates and highlights.

Invited to present the GINNY project to FirstSource and the Honourable Minister Danny Pearson during the MoU signing with Monash University.

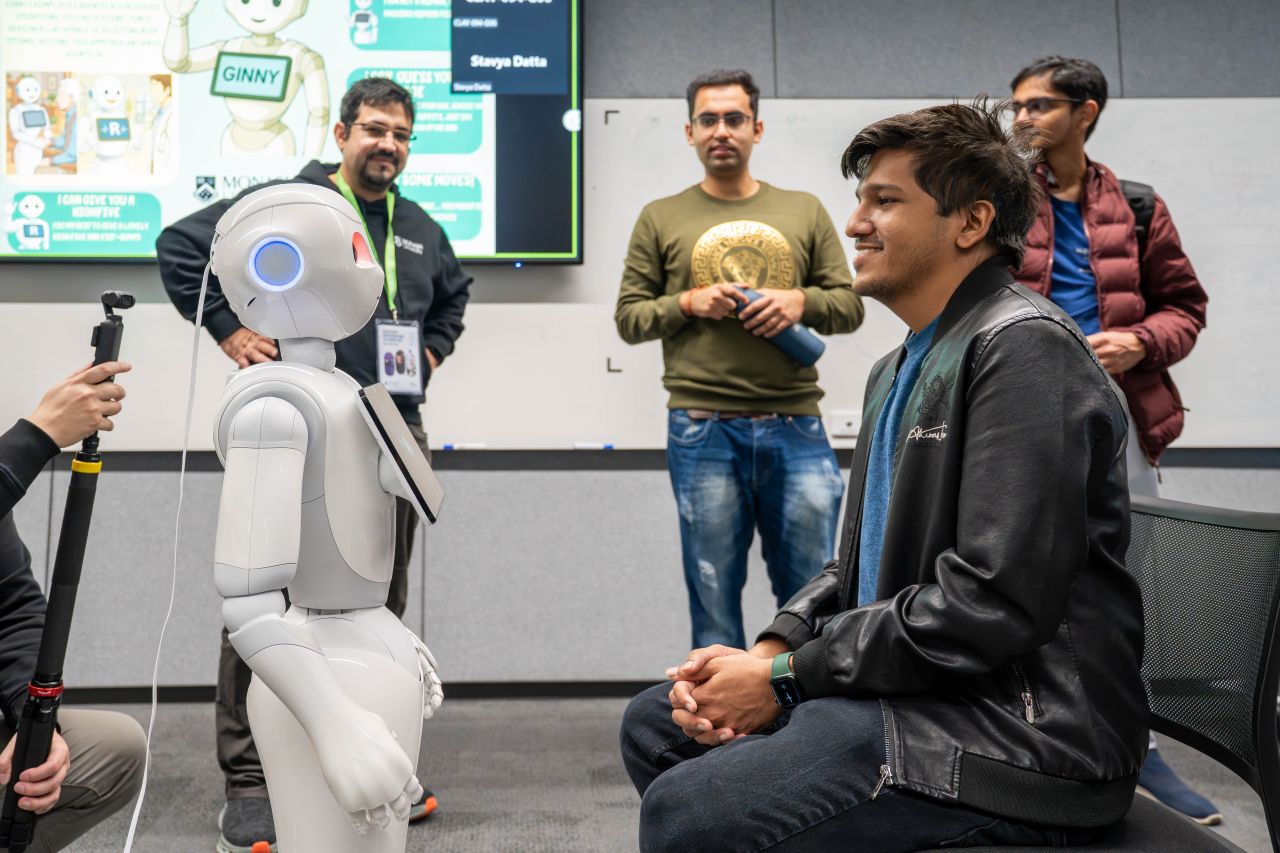

Live GINNY robot demo of Agentic Reasoning and Social World Model memory.

ARIS: Agentic and Relationship Intelligence System for Social Robots. Part of the GINNY project.

Spoke on multimodal agents and social embodiment.

Demonstrated GINNY robot's social interaction capabilities to prospective students and visitors.

Developing agentic AI systems for social robots, with a focus on long-term human–robot interaction, social reasoning, and embodiment using the Pepper robot.

Teaching Artificial Intelligence (FIT3080) and Python Programming (FIT9136). Currently teaching Software Projects (FIT3161/62/63/64). Managed tutors, designed assessments, and maintained a high student satisfaction score of 93/100.

Contributed to the NEUSIS framework under DARPA’s ANSR collaboration. Integrated computer vision modules and simulation environments for autonomous aerial perception and tracking.

Led the development of a no-code computer vision analytics platform, smarth Dynamic AI. Oversaw annotation pipelines and designed system architectures for on-prem deployment.

Engineered facial recognition, PPE detection, and AutoML solutions deployed across major warehouses in India. Achieved 99.4% accuracy on LFW and trained multiple vision models for the Awiros platform.

Worked briefly on analytics and reporting automation tasks, gaining early exposure to enterprise workflows and data systems for Cyber Security.